How does ChatGPT actually work?

ChatGPT is a chatbot based on a large language model (either GPT-3.5 or GPT-4). Under the hood, ChatGPT is a pretrained neural network trained on an enormous amount of text, much of it publicly available on the Internet.

This vast amount of data enables the model to learn patterns, grammar, context, and even some common-sense knowledge, allowing it to generate meaningful and coherent responses to user queries. ChatGPT can also hold interactive, multi-turn conversations that take earlier messages into account, which makes it a promising tool for a wide range of applications.

What is a large language model?

Large language models (LLMs) are deep learning models trained on massive amounts of text to read, understand, generate, and predict text. They belong to natural language processing (NLP), the branch of AI that deals with human language.

When you type in a prompt, the model doesn’t search for the answer on the Internet; it gives you the answer off the top of its head (although it doesn’t have a head). It generates a response one word (more precisely, one token) at a time, choosing each next word based on probabilities derived from the text data it was trained on and the text it has generated so far.
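The word-by-word generation loop can be sketched with a toy model. Here a hand-written table of next-word probabilities (entirely made up for illustration, and conditioned only on the previous word rather than on the whole text) stands in for the neural network. The loop samples one word at a time and appends it until it draws an end marker.

```python
import random

# Hypothetical next-word probabilities. A real model computes these with a neural
# network conditioned on the whole text so far, not just the last word.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.3, "dog": 0.7},
    "cat": {"sleeps": 0.8, "<end>": 0.2},
    "dog": {"barks": 0.9, "<end>": 0.1},
    "sleeps": {"<end>": 1.0},
    "barks": {"<end>": 1.0},
}


def generate(max_words: int = 10, seed: int = 0) -> list[str]:
    rng = random.Random(seed)
    words = ["<start>"]
    for _ in range(max_words):
        probs = NEXT_WORD_PROBS[words[-1]]
        # Pick the next word in proportion to its probability.
        next_word = rng.choices(list(probs), weights=list(probs.values()))[0]
        if next_word == "<end>":
            break
        words.append(next_word)
    return words[1:]  # drop the start marker


print(" ".join(generate(seed=42)))
```

Running it with different seeds produces different but always grammatical little sentences, because every step only ever picks among continuations the table considers plausible.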

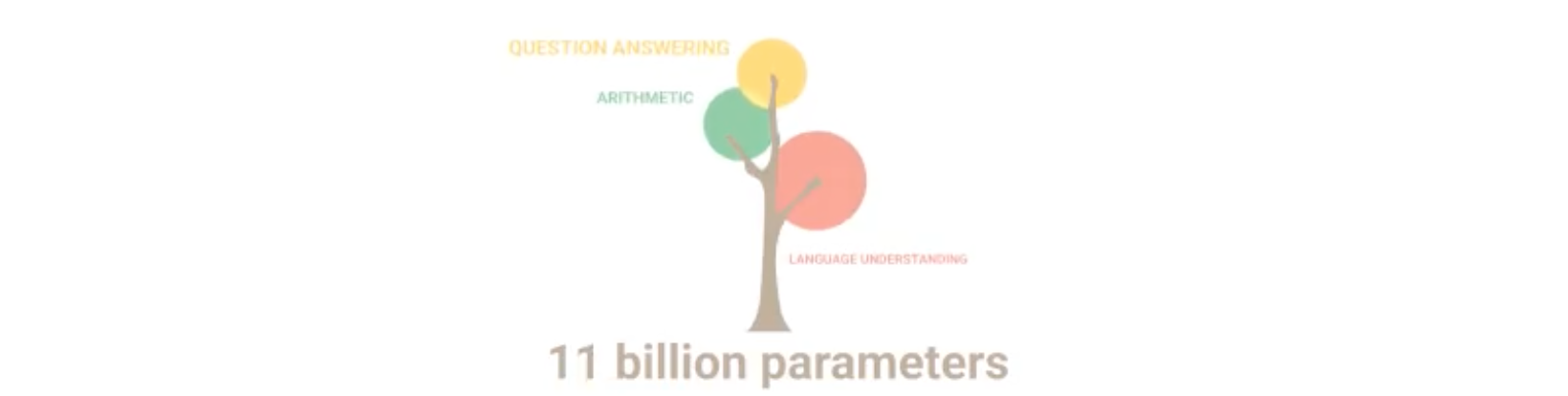

11 billion parameters: question answering, arithmetic, language understanding

How is that possible? Well, ChatGPT doesn’t need to search the Internet for information, because it already knows everything (well, almost). All the knowledge available on the Internet is incorporated into ChatGPT through its 175 billion parameters.

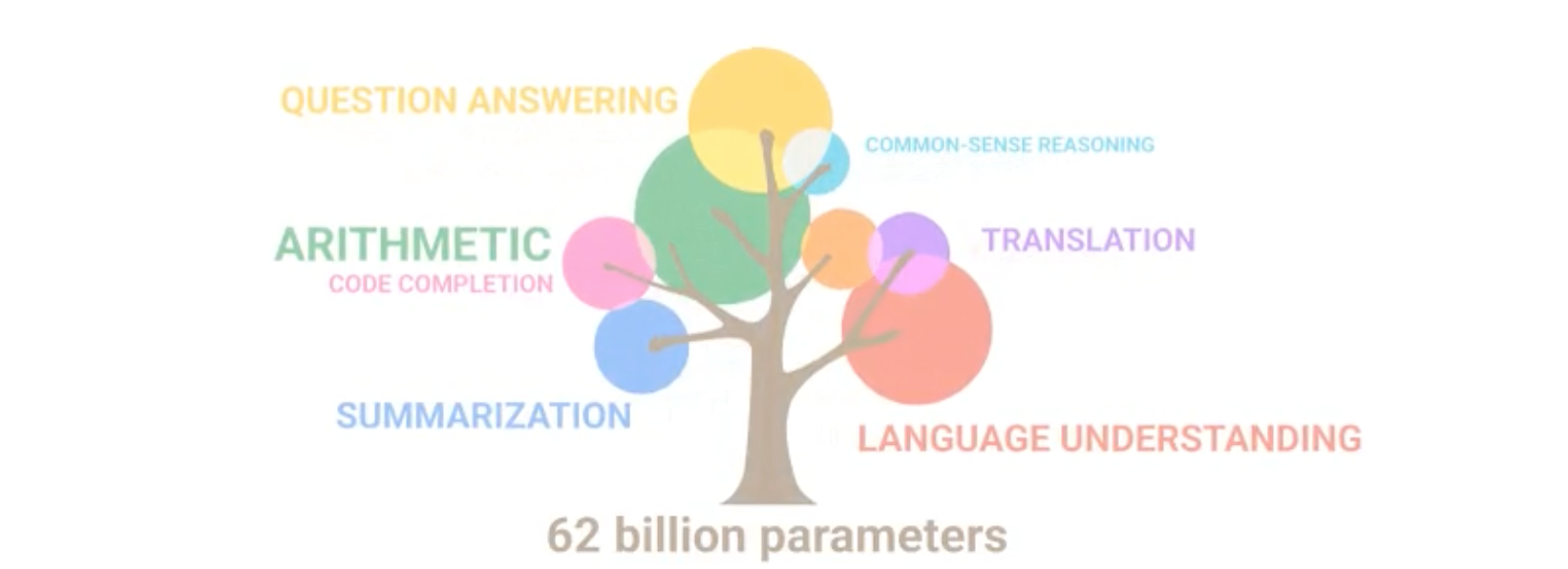

62 billion parameters: translation, common-sense reasoning, code completion

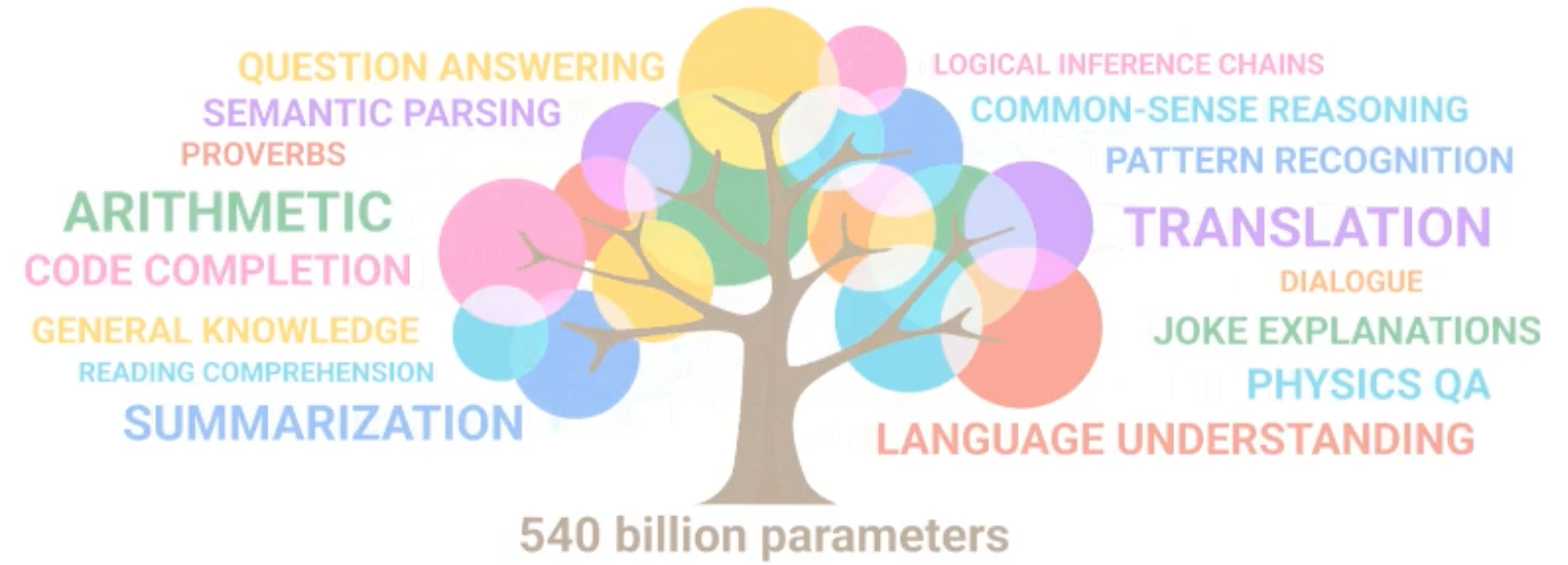

As the number of model parameters increases, new abilities emerge in the model that were not intentionally designed by anyone.

540 billion parameters: logical inference chains, pattern recognition, reading comprehension
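To make “175 billion parameters” more concrete, here is a back-of-the-envelope estimate. It uses the published GPT-3 configuration (96 layers, model width 12,288, a 50,257-token vocabulary, a 2,048-token context) and the common approximation that each Transformer layer holds about 12·d² weights: 4·d² for the attention projections and 8·d² for the feed-forward block. Biases and layer-norm weights are ignored, so this is a sketch rather than an exact count.

```python
def estimate_gpt_params(n_layers: int, d_model: int, vocab_size: int, context_len: int) -> int:
    """Approximate weight count of a GPT-style decoder (biases and layer norms ignored)."""
    attention = 4 * d_model * d_model            # query, key, value and output projections
    feed_forward = 2 * d_model * (4 * d_model)   # two linear layers around a 4x wider hidden layer
    per_layer = attention + feed_forward         # = 12 * d_model**2
    token_embeddings = vocab_size * d_model      # one vector per token in the vocabulary
    position_embeddings = context_len * d_model  # one vector per position in the context
    return n_layers * per_layer + token_embeddings + position_embeddings


# Published GPT-3 (175B) configuration
total = estimate_gpt_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)
print(f"{total:,} parameters (~{total / 1e9:.1f} billion)")
# prints "174,588,899,328 parameters (~174.6 billion)"
```

Almost all of the weights sit in the repeated layers, which is why parameter counts grow roughly with the number of layers times the square of the model width.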

How ChatGPT was trained

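The models behind ChatGPT were trained on a vast mix of books, articles, web pages, and dialogues. OpenAI has not published the full list, but commonly cited sources include:

- WebText2 (an OpenAI corpus of web pages linked from Reddit posts, used to train GPT-3 alongside a filtered version of Common Crawl that started out as about 45 terabytes of compressed text before filtering)

- Cornell Movie Dialogs Corpus (a dataset of over 220,000 conversational exchanges between more than 10,000 pairs of movie characters, taken from movie scripts)

- Ubuntu Dialogue Corpus (a collection of almost 1,000,000 multi-turn technical-support dialogues between Ubuntu users, extracted from Ubuntu chat logs)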

- billions of lines of code from GitHub

First, GPT was pretrained on all the data it had access to without any human labels: it simply learned to predict the next word, and in doing so it picked up on its own the rules and relationships that govern text (this is called “unsupervised”, or more precisely self-supervised, learning).
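To see why no human labels are needed, here is a minimal sketch of the next-word objective on a tiny made-up sentence. Every position in the text supplies its own training example: the previous word is the input and the next word is the target. As a simplifying assumption, a bigram model that just counts which word follows which stands in for the neural network; GPT instead uses a Transformer over tokens, with much longer contexts, trained by gradient descent. The average negative log-likelihood at the end is the cross-entropy loss that pretraining tries to drive down.

```python
import math
from collections import Counter, defaultdict

text = "the cat sat on the mat the cat ate the fish"
words = text.split()

# Self-supervision: each word serves as the "label" for the word before it.
pairs = list(zip(words[:-1], words[1:]))

# "Training": count how often each word follows each context word.
counts: dict[str, Counter] = defaultdict(Counter)
for context, target in pairs:
    counts[context][target] += 1


def prob(context: str, target: str) -> float:
    """Estimated probability that `target` follows `context`."""
    total = sum(counts[context].values())
    return counts[context][target] / total


# Average negative log-likelihood (cross-entropy) over the training pairs.
loss = -sum(math.log(prob(c, t)) for c, t in pairs) / len(pairs)
print(f"{len(pairs)} training pairs, average loss = {loss:.3f}")
```

A lower loss means the model assigns higher probability to the words that actually come next; a neural network reaches low loss on new text by generalizing instead of memorizing counts.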

Then, to fine-tune the language model, a technique called reinforcement learning from human feedback (RLHF) was applied:

- Human AI trainers wrote conversations in which they played both sides: the user and the AI assistant. They had access to model-written suggestions to help compose their responses. The model was then trained with supervised fine-tuning to predict the assistant’s next message given the dialogue history.

- To build a reward model for reinforcement learning, comparison data was collected. AI trainers took conversations with the chatbot, sampled several alternative model responses to the same message, and ranked them by quality, considering things like whether each response made sense and whether it was helpful.

- A reward model was then trained on these rankings to predict how highly a given response would be rated. Finally, the chatbot was fine-tuned against this reward model using Proximal Policy Optimization (PPO), a reinforcement learning algorithm, and the process was repeated over several iterations.
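The reward-modeling step can be sketched as follows. A trainer’s ranking of K responses is turned into every (better, worse) pair it implies, and the reward model is trained so that the better response scores higher, using the pairwise logistic loss -log σ(r_better - r_worse), the form described in OpenAI’s InstructGPT work. As a loudly labeled simplification, the “reward model” here is a linear function of two hypothetical features per response (made-up relevance and coherence scores); the real reward model is a large neural network that reads the full conversation.

```python
import math
from itertools import combinations

# Hypothetical features for four candidate responses: (relevance, coherence).
responses = {
    "A": (0.9, 0.8),
    "B": (0.6, 0.9),
    "C": (0.4, 0.3),
    "D": (0.1, 0.2),
}
ranking = ["A", "B", "C", "D"]  # a trainer's ranking, best first

# Every (better, worse) pair implied by the ranking: 4 choose 2 = 6 pairs.
pairs = list(combinations(ranking, 2))

weights = [0.0, 0.0]  # parameters of the toy linear reward model


def reward(name: str) -> float:
    return sum(w * f for w, f in zip(weights, responses[name]))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


learning_rate = 1.0
for step in range(200):
    grad = [0.0, 0.0]
    for better, worse in pairs:
        margin = reward(better) - reward(worse)
        # Gradient of -log(sigmoid(margin)) w.r.t. each weight:
        # -(1 - sigmoid(margin)) * (feature_better - feature_worse)
        for i in range(2):
            diff = responses[better][i] - responses[worse][i]
            grad[i] -= (1.0 - sigmoid(margin)) * diff
    # Gradient descent on the average loss over all pairs.
    for i in range(2):
        weights[i] -= learning_rate * grad[i] / len(pairs)

print({name: round(reward(name), 2) for name in ranking})
```

After training, the scores reproduce the trainer’s ordering. In RLHF, scores like these become the reward signal that PPO uses to fine-tune the chatbot itself.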

As a result, ChatGPT learned to respond appropriately in a wide range of situations, give more precise and relevant answers, and avoid potentially harmful topics.

Transformer architecture

The training process of ChatGPT involves predicting the next word in a sentence given the previous words. To achieve this, it uses the Transformer architecture (the “T” in GPT, which stands for Generative Pre-trained Transformer), built from stacked layers that combine self-attention with feed-forward networks. Self-attention lets the model weigh each word in the text by its relevance to the word being predicted, which helps it predict the next word accurately.

Earlier recurrent neural networks (RNNs) read text one word at a time, from left to right. This works well when related words are adjacent, but it becomes difficult when they are far apart, for example at opposite ends of a sentence.

As a result, when an RNN processed a page of text, by the middle of the third paragraph it would already have “forgotten” what was at the very beginning.
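A toy calculation shows why this forgetting happens. Below, a one-number “hidden state” is updated as h = w·h + x for each new input x, with the weight w = 0.9 chosen arbitrarily for illustration. The first input’s contribution gets multiplied by w at every later step, so after a few hundred words it has all but vanished. Real RNNs use vectors and nonlinearities, but information (and the training gradient) from early inputs can shrink in a similar multiplicative way.

```python
def hidden_state(inputs: list[float], w: float = 0.9) -> float:
    """Run a one-number linear RNN: h_t = w * h_{t-1} + x_t."""
    h = 0.0
    for x in inputs:
        h = w * h + x
    return h


for n_later in (1, 10, 50, 300):
    # A single 1.0 at the very start, followed by n_later zero inputs,
    # so the final state is exactly what survives of the first word.
    influence = hidden_state([1.0] + [0.0] * n_later)
    print(f"after {n_later:>3} more words: {influence:.2e}")
```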

In contrast, transformers process every word in a sentence simultaneously and compare each word with all the others (in GPT-style models, with every word that precedes it). This enables them to focus their “attention” on the most relevant words, regardless of their position within the input sequence.
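Here is a minimal sketch of that comparison: scaled dot-product attention, softmax(QKᵀ/√d)·V, with the causal mask that GPT-style models use so each token attends only to itself and earlier tokens. The 2-dimensional query, key, and value vectors are made up; in a real model they are computed from token embeddings by learned weight matrices, and each layer runs many attention heads in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i only sees positions 0..i."""
    d = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Compare token i with every token up to and including itself.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output = attention-weighted average of the visible tokens' value vectors.
        out = [sum(w * values[j][k] for j, w in enumerate(weights)) for k in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-D vectors for a 3-token sequence.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

outputs, weights = causal_self_attention(Q, K, V)
for i, w in enumerate(weights):
    print(f"token {i} attends with weights {[round(x, 2) for x in w]}")
```

Because every token’s scores are computed against all visible tokens at once, distance in the sequence does not dilute the signal the way it does in an RNN.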

Tokenization

It is important to note that transformers do not operate on individual words (they can’t read like humans). Instead, the input text is split into tokens: whole words, pieces of words, punctuation marks, and special tokens. Each token is then represented as a vector, a list of numbers that can be pictured as a point (or direction) in a high-dimensional space.

The proximity of token vectors in this space reflects how strongly the tokens are associated: the closer they are, the more related they tend to be. Attention then combines these vectors using learned weights, so each token’s representation can carry crucial information from preceding parts of a paragraph.
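One common way to measure how close two token vectors are is cosine similarity, the cosine of the angle between them. The sketch below uses hypothetical 3-dimensional embeddings invented for illustration; real models learn vectors with hundreds or thousands of dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings (made up for illustration).
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 = same direction, 0.0 = unrelated (orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for other in ("kitten", "car"):
    sim = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[other])
    print(f"cat vs {other}: {sim:.2f}")
```

With these made-up vectors, “cat” lands much closer to “kitten” than to “car”, which is the kind of structure a trained model’s embeddings tend to show.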

When a user interacts with ChatGPT, the model receives the conversation history as input, including both user prompts and model-generated responses. The input is tokenized and then fed into the neural network. Each token is associated with an embedding that represents its meaning in the context of the conversation.

GPT-3’s training dataset contained roughly 500 billion tokens. Mapping tokens into vector space makes it easier for the model to assign meaning to text and predict plausible follow-on text. Many words map to single tokens, though longer or more complex words often break down into multiple tokens. On average, a token is roughly four characters of English text.
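Why do some words become a single token while others split into pieces? GPT models build their vocabulary with byte-pair encoding (BPE): starting from single characters, the most frequent adjacent pair of symbols is repeatedly merged into a new symbol. The sketch below learns four merges from a tiny made-up corpus and tokenizes a few words with them. Real tokenizers work on bytes, handle spaces and punctuation, and learn tens of thousands of merges from huge corpora; the corpus and merge count here are arbitrary.

```python
from collections import Counter


def apply_merge(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_merges(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    corpus = Counter(tuple(word) for word in words)  # each word starts as single characters
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)  # most frequent adjacent pair
        merges.append(best)
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[apply_merge(symbols, best)] += freq
        corpus = new_corpus
    return merges


def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    symbols = tuple(word)
    for pair in merges:  # apply merges in the order they were learned
        symbols = apply_merge(symbols, pair)
    return list(symbols)


corpus_words = ["the"] * 6 + ["they"] * 2 + ["low"] * 3
merges = learn_merges(corpus_words, num_merges=4)
for word in ("the", "low", "they", "lower"):
    print(word, "->", tokenize(word, merges))
```

Frequent words such as “the” and “low” end up as single tokens, while “lower”, which never appeared in the corpus, is split into “low”, “e”, and “r”.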

During the inference stage, when the model generates responses, a process known as autoregression is used: the model predicts one token at a time, conditioning each prediction on the conversation history and on the tokens it has already generated. To keep the response coherent and relevant while still allowing some variety, sampling techniques such as top-p sampling and temperature scaling are used.

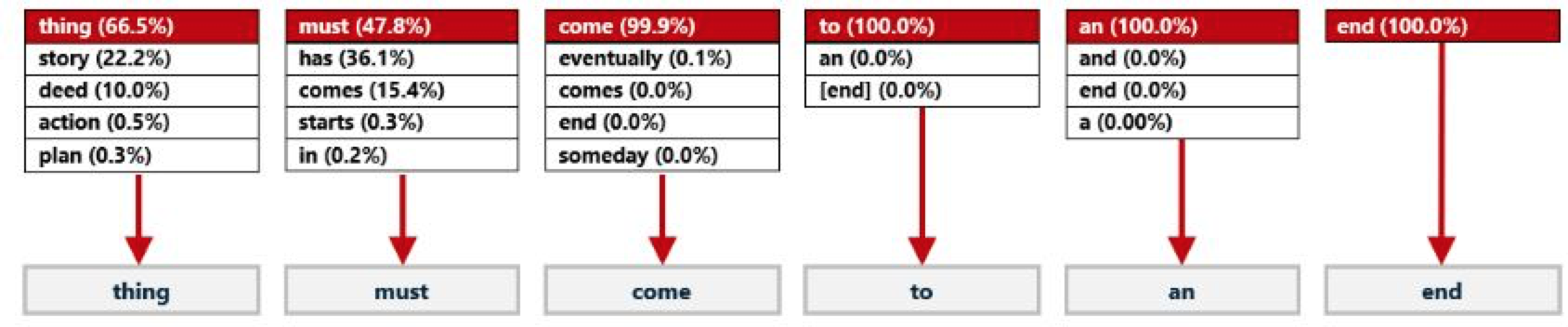

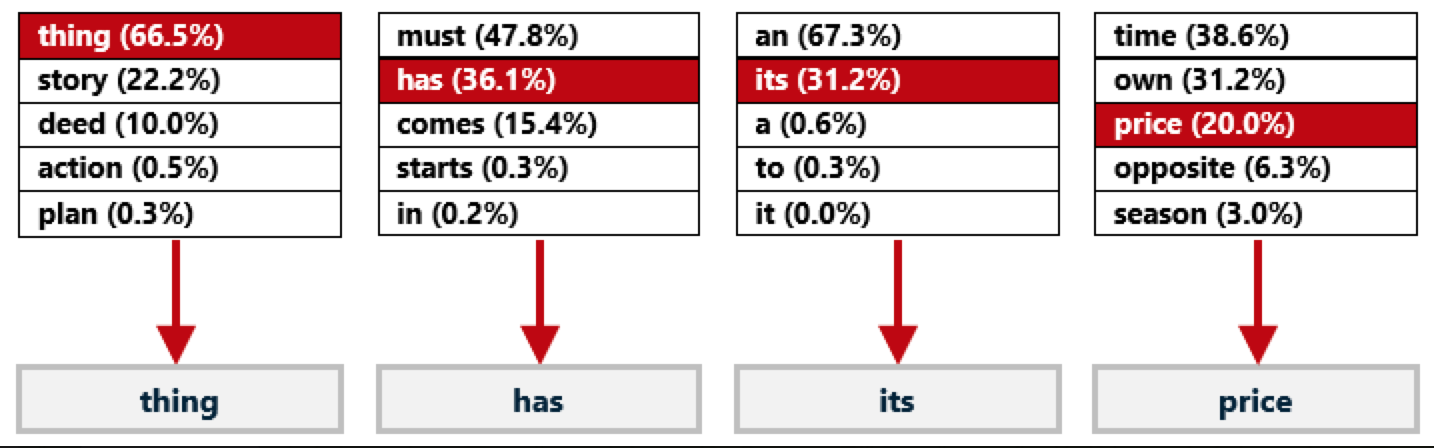

In short, the top-p parameter gives the model a pool of options (tokens) to choose from, while the temperature determines the probability of choosing a certain token. When the temperature is set to 0, the model is going to pick only the most “popular” tokens (words that are most often found together in the text data ChatGPT was trained):

That is not always great. Higher temperatures make outcomes more diverse:
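Both settings can be sketched in a few lines. Temperature divides the model’s raw scores (logits) before the softmax, so low values sharpen the distribution and high values flatten it. Top-p (nucleus) sampling then keeps only the smallest set of most likely tokens whose probabilities add up to at least p, renormalizes, and samples from that set. The candidate tokens and logits below are invented for illustration, and treating temperature 0 as “always take the top token” follows the usual convention rather than a literal division by zero.

```python
import math
import random

# Hypothetical raw scores (logits) for the next token after "The sky is".
LOGITS = {"blue": 4.0, "clear": 2.5, "falling": 1.0, "purple": 0.5}


def next_token_distribution(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Softmax of logits / temperature; temperature 0 means greedy (argmax)."""
    if temperature == 0:
        best = max(logits, key=logits.get)
        return {tok: (1.0 if tok == best else 0.0) for tok in logits}
    scaled = {tok: score / temperature for tok, score in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the most likely tokens until their total probability reaches p, then renormalize."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    return {tok: prob / cumulative for tok, prob in kept.items()}


def sample(logits: dict[str, float], temperature: float = 1.0, top_p: float = 1.0, rng=random) -> str:
    probs = top_p_filter(next_token_distribution(logits, temperature), top_p)
    return rng.choices(list(probs), weights=list(probs.values()))[0]


rng = random.Random(0)
for t in (0, 0.7, 1.5):
    picks = [sample(LOGITS, temperature=t, top_p=0.9, rng=rng) for _ in range(1000)]
    print(f"temperature {t}:", {tok: picks.count(tok) for tok in LOGITS})
```

With top_p=0.9, temperature 0 always yields “blue”, temperature 0.7 mixes in “clear”, and temperature 1.5 flattens the distribution enough that “falling” also enters the pool, while “purple” is still cut off.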

More on ChatGPT parameters: https://talkai.info/blog/understanding_chatgpt_settings/