From Science Fiction to Reality: The Real Dangers of AI

The rise of artificial intelligence represents a significant technological breakthrough that is poised to revolutionize society much like the Internet, personal computers, and cell phones have done. Its impact is pervasive, reaching into many aspects of human life, from work and education to leisure activities. The rapid advancement of neural networks is causing some concern, so in this article we explore the potential dangers that artificial intelligence may pose to humanity.

Is AI dangerous? Who voiced concerns?

In sci-fi movies, the idea of an uncontrollable artificial intelligence bent on dominating or destroying humanity is a popular theme, as seen in films like "The Matrix" and "The Terminator". With the rapid pace of technological advancement today, it can be challenging for the average person to keep up. The swift progress of AI is causing our societies to adapt quickly, prompting fears due to the complexity of these technologies and the innate human fear of the unknown.

Not only are ordinary individuals feeling anxious about AI, but experts in the field are also expressing their concerns. For instance, Geoffrey Hinton, often referred to as "the godfather of AI", has voiced his own apprehensions:

These things could get more intelligent than us and could decide to take over, and we need to worry now about how we prevent that happening.

I thought for a long time that we were, like, 30 to 50 years away from that. So I call that far away from something that's got greater general intelligence than a person. Now, I think we may be much closer, maybe only five years away from that.

There's a serious danger that we'll get things smarter than us fairly soon and that these things might get bad motives and take control.

On March 22, 2023, an open letter was published calling for a halt in the development of artificial intelligence more powerful than GPT-4 for a period of six months:

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system’s potential effects.

The letter was signed by 1,800 leaders of tech companies and 1,500 professors, scholars, and researchers in the field of AI, including:

- Elon Musk, CEO of SpaceX, Tesla & Twitter

- Steve Wozniak, Co-founder, Apple

- Emad Mostaque, CEO, Stability AI

- Jaan Tallinn, Co-Founder of Skype, Centre for the Study of Existential Risk, Future of Life Institute

- Evan Sharp, Co-Founder, Pinterest

- Craig Peters, CEO, Getty Images

- Mark Nitzberg, Center for Human-Compatible AI, UC Berkeley, Executive Director

- Gary Marcus, New York University, AI researcher, Professor Emeritus

- Zachary Kenton, DeepMind, Senior Research Scientist

- Ramana Kumar, DeepMind, Research Scientist

- Michael Osborne, University of Oxford, Professor of Machine Learning

- Adam Smith, Boston University, Professor of Computer Science, Gödel Prize, Kanellakis Prize

In total, more than 33,000 signatures were collected.

Other notable figures, such as Sam Altman (CEO, OpenAI), Geoffrey Hinton (Turing Award Winner), Dario Amodei (CEO, Anthropic), and Bill Gates, as well as over 350 executives and AI researchers signed the following statement:

Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.

Dangers of Artificial Intelligence

In 2018, a self-driving Uber car hit and killed a pedestrian.

In 2022, scientists reconfigured an AI system originally designed for creating non-toxic, healing molecules to produce chemical warfare agents. By changing the system's settings to reward toxicity instead of penalizing it, they were able to quickly generate 40,000 potential molecules for chemical warfare in just six hours.

In 2023, researchers demonstrated how GPT-4 could manipulate a TaskRabbit worker into completing a CAPTCHA verification. More recently, a tragic incident was reported in which an individual took their own life after an unsettling conversation with a chatbot.

The use of AI systems, regardless of their intended purpose, can lead to negative consequences, such as:

- Automation-spurred job loss

- Deepfakes and misinformation

- Privacy violations

- Unclear legal regulation

- Algorithmic bias caused by bad data

- Financial crises

- Cybercrimes

- Weapons automation

- Uncontrollable superintelligence

Artificial intelligence systems are becoming increasingly powerful, and we don't know their limitations. These systems could be used for malicious purposes. Let's examine the various risks more closely.

Job Losses Due to Automation by AI

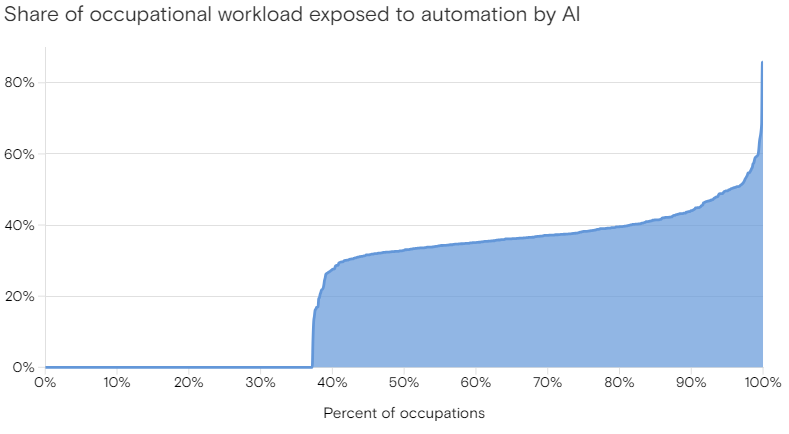

According to research conducted by Goldman Sachs, artificial intelligence may significantly impact employment markets worldwide. By analyzing databases that detail the task content of over 900 occupations in the US and 2000 occupations in the European ESCO database, Goldman Sachs economists estimate that roughly two-thirds of occupations are exposed to some degree of automation by AI.

In the chart, the vertical axis shows the share of occupational workload exposed to automation by AI, and the horizontal axis shows the percentage of occupations.

Changes in workflows caused by these advancements could potentially automate the equivalent of 300 million full-time jobs. However, not all of this automated work will lead to layoffs. Many jobs and industries are only partially susceptible to automation, meaning they are more likely to be complemented by AI rather than replaced entirely.

Seo.ai takes this prediction even further, estimating that around 800 million jobs globally could be replaced by artificial intelligence by 2030. To prepare for this upcoming shift, it is expected that over the next three years, more than 120 million workers will undergo retraining.

If you want to know which professions are more susceptible to automation and which ones are less threatened by automation, check out our article on the topic.

Misinformation

Even the most advanced large language models are susceptible to generating incorrect or nonsensical information. These errors (hallucinations) are often a result of the model's reliance on statistical patterns in the data it has been trained on rather than true understanding or reasoning.
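The following is a minimal sketch of that failure mode, not a description of how any real chatbot is built. It assumes a toy "language model" that only counts which word tends to follow which; the training sentences and the `complete` helper are invented for this illustration.

```python
from collections import Counter, defaultdict

# Hypothetical training corpus, invented for this illustration.
corpus = [
    "lyon is a city in france",
    "nice is a city in france",
    "rome is a city in italy",
]

# Count which word follows each word (a simple bigram model).
follows = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1

def complete(prompt, max_new_words=1):
    """Greedily append the statistically most common next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model has never seen this word; stop generating
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# "berlin" never appears in the corpus, yet the model still answers,
# because it only looks at what usually follows the word "in".
answer = complete("berlin is a city in")
print(answer)
```

The toy model produces a fluent, confident sentence that happens to be false, because "france" is simply the most frequent continuation it has seen. Real large language models are vastly more sophisticated, but the sketch shows how pattern-matching alone can yield convincing errors.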

In other words, chatbots can sometimes make up facts. This was made clear in 2023, when a New York attorney got in hot water for using ChatGPT to conduct legal research for a personal injury case. He put together a 10-page brief, referencing several previous court decisions, all of which were proven to be completely fabricated by the chatbot. As a result, the attorney and a colleague were sanctioned by a federal judge and fined $5,000 each.

In 2024, yet another New York lawyer was disciplined for citing a non-existent case generated by artificial intelligence.

Another example is Stack Overflow, a question and answer website used primarily by programmers and developers to ask technical questions, seek help with coding problems, and share knowledge within the programming community.

The site had to ban all use of generative AI because the rate of correct answers from various chatbots was too low, even though the answers typically looked convincing.

Social Manipulation

Social media platforms are flooded with so much content these days, it can be overwhelming to keep up with it all. That's where algorithmic curation comes in. It essentially helps sift through all the noise and present users with content that is most likely to interest them based on past behavior. While this can be helpful in managing the endless stream of information, it also means that the platform has a lot of control in shaping what users see and interact with.

However, changing what shows up on someone's news feed can impact their mood and how they view the world in general. In January 2012, Facebook data scientists demonstrated how decisions about curating the News Feed could change the happiness level of users. The events of January 2021 at the US Capitol further highlighted how someone's social media consumption can play a role in radicalization.

Additionally, because sensational material tends to keep users hooked for longer periods of time, algorithms may inadvertently steer users towards provocative and harmful content in order to increase engagement. Even suggesting content based on a user's interests can be problematic, as it can further entrench their beliefs within a "filter bubble" rather than exposing them to diverse perspectives. This can ultimately result in increased polarization among users.
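The dynamic described above can be sketched with a toy ranker. This is an illustrative assumption about how an engagement-only feed might behave, not the algorithm of any actual platform; the `Post` class, the `rank_feed` function, and all scores are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical model score between 0 and 1
    sensational: bool

# Hypothetical candidate posts with invented scores.
candidates = [
    Post("Local library extends opening hours", 0.12, False),
    Post("You won't BELIEVE what this politician said", 0.81, True),
    Post("Explainer: how the new tax rules work", 0.25, False),
    Post("Outrage erupts over viral video", 0.74, True),
]

def rank_feed(posts, top_k=2):
    """Return the top_k posts ordered by predicted engagement alone."""
    ranked = sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)
    return ranked[:top_k]

feed = rank_feed(candidates)
for post in feed:
    print(f"{post.predicted_engagement:.2f}  {post.title}")
```

Nothing in `rank_feed` asks for provocative content. Because the objective is engagement and sensational posts score higher on it in this example, they fill the feed anyway.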

When we hand over our decision-making power to platforms, we are essentially giving them control over what we see. Social media, with its advanced algorithms, excels at targeted marketing by understanding our preferences and thoughts. Recent investigations are looking into the role of Cambridge Analytica and similar firms in using data from 50 million Facebook users to influence major political events like the 2016 U.S. Presidential election and the U.K.’s Brexit referendum. If these allegations are proven true, they highlight the potential of AI to manipulate society. A more recent example is Ferdinand Marcos, Jr. using a TikTok troll army to sway younger voters in the 2022 Philippine presidential election. By leveraging personal data and algorithms, AI can effectively target individuals with specific propaganda, whether it's based on facts or fiction.

Deepfakes

Deepfakes refer to digitally altered videos or images that realistically depict an individual saying or doing something they never actually said or did. This technology uses deep learning algorithms to manipulate existing video and audio footage to create convincing fake content.

“No one knows what’s real and what’s not,” futurist Martin Ford said. “So it really leads to a situation where you literally cannot believe your own eyes and ears; you can’t rely on what, historically, we’ve considered to be the best possible evidence... That’s going to be a huge issue.”

One of the main reasons why deepfakes are considered dangerous is their potential to be used for malicious purposes. For example, deepfakes could be used to create fake video evidence in legal cases, frame individuals for crimes they didn't commit, or even impersonate a political figure to spread false information. By manipulating media in this way, deepfakes have the power to disrupt trust in traditional sources of information and sow confusion and discord in society.

According to DeepMedia, a company working on tools to detect synthetic media, 500,000 deepfakes were posted on social media sites globally in 2023. That’s 3 times as many video deepfakes and 8 times as many voice deepfakes compared to 2022.

Some recent examples of malicious use of deepfakes include the creation of fake celebrity pornography, where faces of celebrities are digitally inserted into pornographic videos without their consent. Additionally, there have been instances of deepfake videos being used to manipulate stock prices, defame individuals, or spread political propaganda. These examples highlight the potential for deepfakes to be used for harmful and deceptive purposes.

Cybercrime

Cybercrime encompasses a wide variety of criminal activities that use digital devices and networks. These crimes involve the use of technology to commit fraud, identity theft, data breaches, computer viruses, scams, and other malicious acts. Cybercriminals exploit weaknesses in computer systems and networks to gain unauthorized access, steal sensitive information, disrupt services, and cause harm to individuals, organizations, and governments.

Adversaries are increasingly using readily available AI tools like ChatGPT, DALL-E, and Midjourney for automated phishing attacks, impersonation attacks, social engineering attacks, and fake customer support chatbots.

According to the SlashNext State of Phishing Report 2023, there has been a 1265% surge in malicious phishing emails, largely attributed to the use of AI tools for targeted attacks.

Impersonation attacks are becoming increasingly common. Scammers are using ChatGPT and other tools to impersonate real individuals and organizations, engaging in identity theft and fraud. Similar to phishing attacks, they utilize chatbots to send voice messages posing as a trusted friend, colleague, or family member in order to obtain personal information or access to accounts. In a notable case from March 2019, the head of a UK subsidiary of a German energy company fell victim to a fraudster who emulated the CEO's voice, leading to a transfer of nearly £200,000 ($243,000) into a Hungarian bank account. The funds were later moved to Mexico and dispersed to multiple locations. Investigators haven’t identified any suspects.

In 2023, the Internet Crime Complaint Center (IC3) received an unprecedented number of complaints from the American public: a total of 880,418 complaints were filed, with potential losses exceeding $12.5 billion. This signifies a nearly 10% increase in the number of complaints received and a 22% increase in losses compared to 2022. Despite these staggering figures, it is important to note that they likely underestimate the true extent of cybercrime in 2023. For example, when the FBI recently dismantled the Hive ransomware group, it was discovered that only about 20% of Hive's victims had reported the crime to law enforcement.

Invasion of Privacy

A prime example of social surveillance is China’s use of facial recognition technology in offices, schools and other venues. This technology not only enables tracking of individuals' movements, but also potentially allows the government to collect extensive data for monitoring their actions, activities, relationships and ideological beliefs.

Individuals can now be monitored both online and in their daily lives. Each citizen is evaluated based on their behaviors, such as jaywalking, smoking in non-smoking areas, and time spent playing video games. Imagine that each action affects your personal score within the social credit system.

When Big Brother is watching you and then making decisions based on that information, it’s not only an invasion of privacy but can quickly turn to social oppression.

Financial Crises

In today's financial world, the use of machine learning algorithms is widespread, with hedge funds and investment firms heavily relying on these models to analyze stocks and assets. These algorithms are constantly fed with huge amounts of traditional and alternative data to make trading decisions. However, there is a growing concern that algorithmic trading could potentially trigger the next major financial crisis.

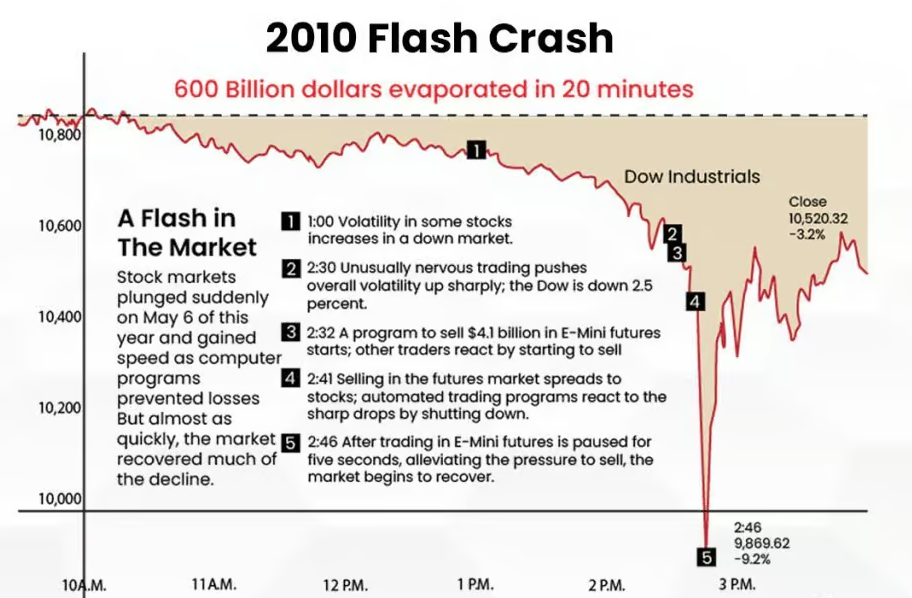

2010 Flash Crash. $600 billion evaporated in 20 minutes

One notable example of the dangers of faulty algorithms is the 2010 Flash Crash, when the stock market suddenly plunged nearly 1,000 points in a matter of minutes. Although the market indices partially rebounded the same day, the Flash Crash erased almost $1 trillion in market value. This sudden and drastic drop in prices was largely attributed to automated trading algorithms reacting to market conditions in an unpredictable manner. Another instance was the Knight Capital Flash Crash in 2012, when a malfunctioning algorithm caused the firm to lose $440 million in just 45 minutes, ultimately leading to its demise.

These crashes serve as sobering reminders of the potential risks posed by algorithmic trading in the financial markets. When algorithms are not properly designed, tested, or monitored, they can have catastrophic consequences. It is crucial for financial institutions to thoroughly vet their algorithms and ensure proper risk management practices are in place to prevent similar disasters from occurring in the future.

Killer Robots

Autonomous weapons powered by artificial intelligence (AI) have long been a topic of debate and concern among governments, military officials, and human rights advocates. These systems, also known as "killer robots" or "lethal autonomous weapons," have the ability to independently select and engage targets without human intervention. This raises significant ethical, legal, and security concerns, as these weapons have the potential to make life or death decisions without human oversight.

The development of autonomous weapons has accelerated in recent years, as AI technology has become more advanced and widespread. These weapons can range from unmanned drones to ground-based systems that can autonomously identify and attack targets. Proponents of autonomous weapons argue that they can reduce human casualties in conflict zones and provide more precise and efficient military operations. However, critics argue that these systems raise serious ethical questions and can have unintended consequences, such as escalation of conflicts and civilian casualties.

The danger posed by autonomous weapons powered by AI is very real. These systems have the potential to be hacked or malfunction, leading to unintended consequences and loss of control. Additionally, the lack of human oversight in decision-making raises concerns about accountability and the potential for violations of international humanitarian law.

In 2020, over 30 countries called for a ban on lethal autonomous weapons, citing concerns about the potential for machines to make life or death decisions. Despite these concerns, the development and deployment of autonomous weapons powered by AI continue to progress. Countries like the United States, Russia, China, and Israel are known to be investing heavily in these technologies. In the US, the Department of Defense has been developing autonomous weapons systems, including semi-autonomous drones and unmanned ground vehicles.

Uncontrollable Superintelligence

Artificial intelligence surpasses the human brain in various ways including speed of computation, internal communication speed, scalability, memory capacity, reliability, duplicability, editability, memory sharing and learning capabilities:

- AI operates at potentially multiple GHz compared to the 200 Hz limit of biological neurons.

- Axons transmit signals at 120 m/s, while computers transmit signals electrically or optically, at a substantial fraction of the speed of light.

- AI can easily scale by adding more hardware, unlike human intelligence limited by brain size and social communication efficiency.

- Working memory in humans is limited compared to AI's expansive memory capacity.

- The reliability of transistors in AI surpasses that of biological neurons, enabling higher precision and less redundancy.

- AI models can be easily duplicated, modified, and can learn from other AI experiences more efficiently than humans.
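To put the first two comparisons in the list above into perspective, here is a rough back-of-the-envelope calculation. It is a sketch under stated assumptions: the 2 GHz clock is just one stand-in for "multiple GHz", and the speed of light in a vacuum is used as an upper bound, since real electrical and optical signals travel somewhat slower.

```python
# Figures quoted in the list above.
NEURON_MAX_FIRING_HZ = 200
AXON_SIGNAL_SPEED_M_S = 120

# Assumptions made for this illustration.
CPU_CLOCK_HZ = 2e9                 # 2 GHz, a stand-in for "multiple GHz"
SPEED_OF_LIGHT_M_S = 299_792_458   # physical constant, used as an upper bound

clock_ratio = CPU_CLOCK_HZ / NEURON_MAX_FIRING_HZ
signal_ratio = SPEED_OF_LIGHT_M_S / AXON_SIGNAL_SPEED_M_S

print(f"Clock rate advantage:   about {clock_ratio:.0e}x")
print(f"Signal speed advantage: about {signal_ratio:.1e}x")
```

Under these assumptions, the hardware advantage is on the order of ten million times in switching rate and at most a few million times in signal speed. These ratios compare raw physical limits only and say nothing about how efficiently either system uses them.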

One day AI may reach a level of intelligence that far surpasses that of humans, leading to what is known as an intelligence explosion.

This idea of recursive self-improvement, where AI continually improves itself at an exponential rate, has sparked concerns about the potential consequences of creating a superintelligent entity. Imagine a scenario where AI reaches a level of intelligence that allows it to outthink and outperform humans in every conceivable way. This superintelligence could potentially have the power to make decisions that greatly impact our society and way of life. Just as humans currently hold the fate of many species in our hands, the fate of humanity may one day rest in the hands of a superintelligent AI.

Overreliance on AI and Legal Responsibility

Relying too heavily on AI technology could result in a decrease in human influence and functioning in certain areas of society. For example, using AI in healthcare may lead to a decrease in human empathy and reasoning. Additionally, utilizing generative AI for creative pursuits could stifle human creativity and emotional expression. Excessive interaction with AI systems could also lead to a decline in peer communication and social skills. While AI can be beneficial for automating tasks, there are concerns about its impact on overall human intelligence, abilities, and sense of community.

Furthermore, there are potential dangers that could result in physical harm to humans. For instance, if companies rely solely on AI predictions for maintenance schedules without other verification, it could result in machinery malfunctions that harm workers. In healthcare, AI models could lead to misdiagnoses.

In addition to physical harm, there are non-physical ways in which AI could pose risks to humans if not properly regulated. This includes issues with digital safety such as defamation or libel, financial safety like misuse of AI in financial recommendations or credit checks, and equity concerns related to biases in AI leading to unfair rejections or acceptances in various programs.

And, when something goes wrong, who should be held accountable? Is it the AI itself, the developer who created it, the company that put it into use, or is it the operator if a human was involved?

* * *

In conclusion, while artificial intelligence does come with many risks and threats, it also has the potential to greatly benefit society and improve our lives. It is important to recognize that the good often outweighs the bad when it comes to AI technology. In our next article, we will discuss strategies for mitigating the risks associated with AI, ensuring that we can fully harness its potential for positive change.