Managing the Risks of Artificial Intelligence for a Safer Future

Technologies based on artificial intelligence are quickly making their way into all aspects of life: medicine, education, finance, social media, autonomous vehicles, programming, and more. Progress cannot be halted, so the impact of AI will only continue to expand each year. In our previous article, we examined the risks of artificial intelligence; now we will explore how the negative effects of using AI can be reduced.

AI detection tools

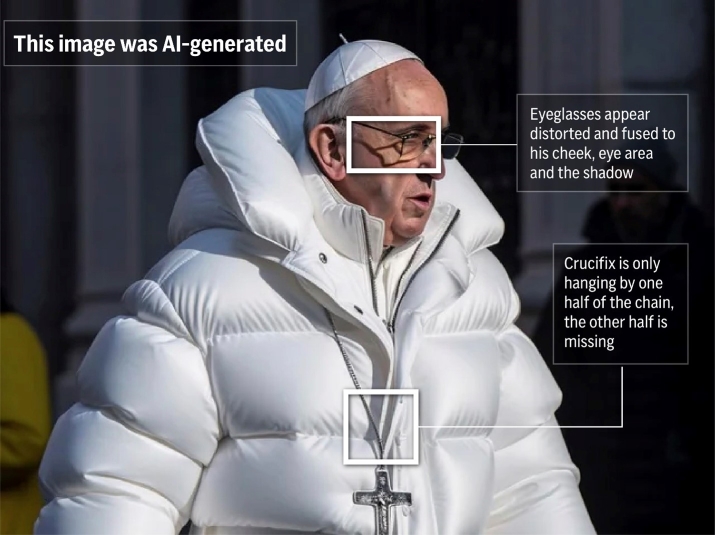

With the aid of modern technologies, it is possible to fabricate audio, photos, and videos, create deepfakes, manipulate public opinion, imitate another person's voice, and falsify evidence.

Researchers are now working on ways to detect forgeries, allowing them to determine whether an audio or video recording has been altered or entirely generated by artificial intelligence.

Detecting generated images

- Intel created what it describes as the first real-time deepfake detection platform, based on its FakeCatcher technology. The technology uses remote photoplethysmography to analyze subtle “blood flow” signals in the pixels of a video. Signals from multiple frames are processed by a classifier to determine whether the video in question is real or fake.

- The U.S. government agency DARPA is working on SemaFor (short for Semantic Forensics), a set of technologies intended to detect synthetic, AI-generated images.

In addition, dozens of tools already exist that aim to detect generated images and text.
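
To make the remote photoplethysmography idea above more concrete, here is a minimal Python sketch. It is not Intel's FakeCatcher implementation, whose details are not covered here. It assumes we already have the average green-channel brightness of a face region for each video frame, and it checks whether that signal contains a dominant rhythm in the range of a human heartbeat. The function name `dominant_pulse`, the 0.7 to 4.0 Hz band, and the synthetic test signal are all illustrative assumptions.

```python
import math


def dominant_pulse(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Return (bpm, peak_share) for a per-frame mean-green signal.

    bpm is the strongest frequency inside the assumed heart-rate band,
    peak_share is the fraction of total spectral power at that frequency.
    Returns (None, 0.0) when the signal carries no variation at all.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]  # remove the constant skin tone

    def power(k):
        # Squared magnitude of bin k of a plain discrete Fourier transform.
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        return re * re + im * im

    powers = {k: power(k) for k in range(1, n // 2)}
    total = sum(powers.values())
    # Keep only bins whose frequency (k * fps / n) lies in the heart-rate band.
    band = {k: p for k, p in powers.items() if lo_hz <= k * fps / n <= hi_hz}
    if not band or total == 0:
        return None, 0.0
    peak = max(band, key=band.get)
    return 60.0 * peak * fps / n, band[peak] / total


# Hypothetical input: 10 seconds of 30 fps video with a faint 1.2 Hz pulse.
signal = [100 + 0.5 * math.sin(2 * math.pi * 1.2 * i / 30) for i in range(300)]
bpm, share = dominant_pulse(signal, fps=30)
print(round(bpm))  # 72
```

A real detector would feed many such signals, taken from different face regions, into a trained classifier rather than rely on a single number. The sketch only shows why a generated face with no physiologically plausible rhythm could stand out.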

Critical thinking

Disinformation, propaganda, and various methods of deception existed long before the invention of artificial intelligence. Perhaps you or someone you know has been targeted by scammers. Some people are easily deceived by schemes like the "Nigerian prince" inheritance email, while others are more skeptical and think critically. With the rise of technology that can manipulate images, audio, and video, we must now be even more diligent in fact-checking.

Futurist Martin Ford said: “No one knows what’s real and what’s not. So it really leads to a situation where you literally cannot believe your own eyes and ears; you can’t rely on what, historically, we’ve considered to be the best possible evidence.”

Countering scams and misinformation will be a cyclical process: as new ways to detect deception emerge, others will develop ways to circumvent them, leading to the creation of even more safeguards. Although no safeguard offers complete protection, we will not be defenseless.

Fake photo of Pope Francis

Creating new jobs

The widespread adoption of artificial intelligence comes with the risk of job loss. We discussed this problem in detail in a previous article, where you can also find links to studies and learn which professions are most and least at risk.

Of course, many people will lose their jobs due to artificial intelligence (according to various estimates, from 100 to 800 million people), and some will have to undergo professional retraining (Seo.ai estimates their number at 120 million people).

However, AI will not only take away jobs but also create new ones. It is even possible that new jobs will appear faster than old ones disappear. Here's what the World Economic Forum writes about it:

Although the number of jobs destroyed will be surpassed by the number of ‘jobs of tomorrow’ created, in contrast to previous years, job creation is slowing while job destruction accelerates. Based on these figures, we estimate that by 2025, 85 million jobs may be displaced by a shift in the division of labour between humans and machines, while 97 million new roles may emerge.

These emerging jobs include:

- AI Model and Prompt Engineers

- Interface and Interaction Designers

- AI Content Creators

- Data Curators and Trainers

- Ethics and Governance Specialists

Some of the new jobs

Embracing the technology

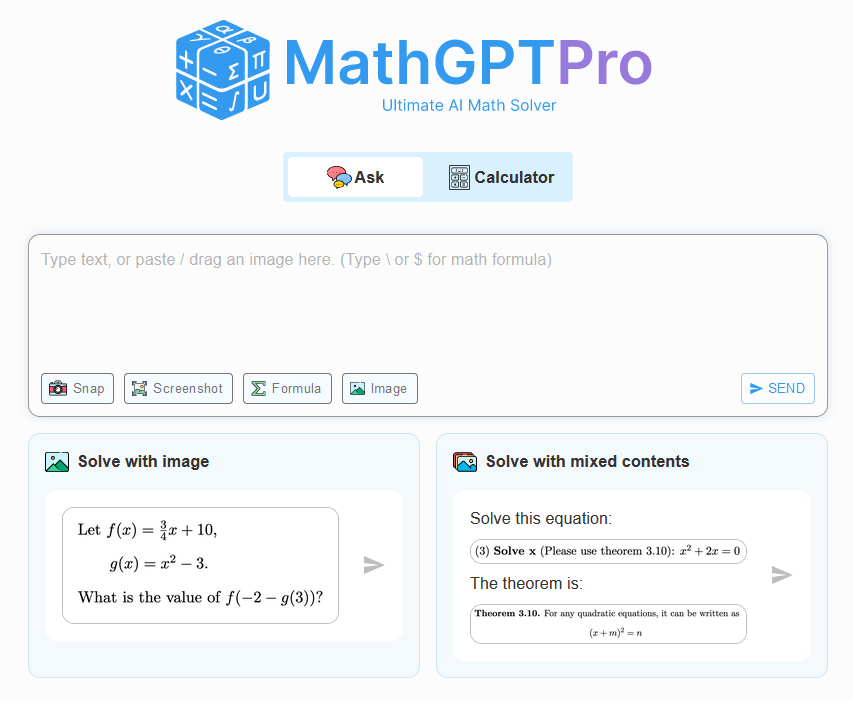

Some teachers are concerned that students may not develop their writing skills because AI will do the work for them. A similar situation arose in the 1970s and 1980s, when electronic calculators became widespread: math teachers worried that students would no longer learn basic arithmetic.

There have always been opponents of progress. Thousands of years ago, in Ancient Greece, Socrates (as recounted in Plato's Phaedrus) spoke out against writing itself:

[Writing] will create forgetfulness in the learners’ souls, because they will not use their memories; they will trust to the external written characters and not remember of themselves. The specific which you have discovered is an aid not to memory, but to reminiscence, and you give your disciples not truth, but only the semblance of truth; they will be hearers of many things and will have learned nothing; they will appear to be omniscient and will generally know nothing; they will be tiresome company, having the show of wisdom without the reality.

I'm not old enough to remember Ancient Greece, but my math teacher used to say, "You won't always have a calculator in your pocket." And guess what? I do have a calculator in my pocket. It’s an application on my phone.

Progress cannot be stopped. Sooner or later, people will have to embrace AI-based technologies. AI will become as common a tool as a calculator.

MathGPT Pro

For now, we need to remember that chatbots are imperfect and prone to hallucinations, so the information they generate needs to be carefully checked for accuracy. However, working with sources and double-checking facts is already standard practice in educational and research work. Over time, chatbots will likely become better and more reliable; we can see this in the evolution of OpenAI's GPT models, from GPT-2 to GPT-4.

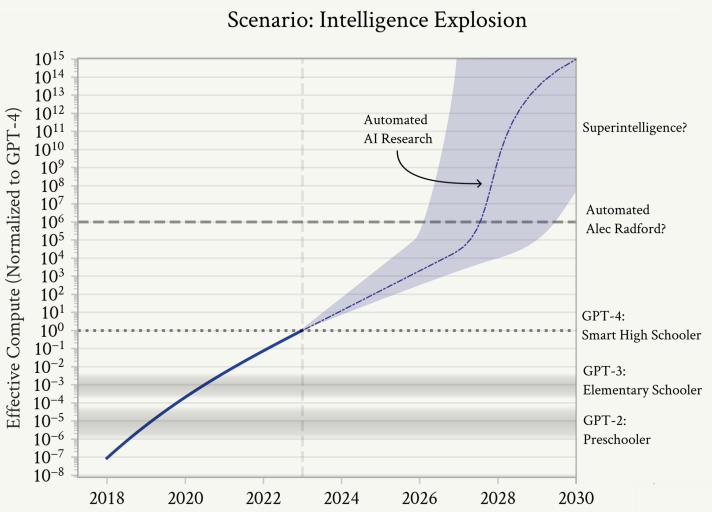

Scalable oversight

As AI systems become more powerful and complex, it becomes increasingly challenging to control them through human feedback. It can be slow or infeasible for humans to evaluate complex AI behaviors, especially when AI outperforms humans in a given domain. To detect when an AI's output is falsely convincing, humans need either assistance or a great deal of time. Scalable oversight, a set of techniques for supervising AI systems whose work humans cannot easily evaluate directly, aims to reduce the time and effort needed for supervision.

According to Nick Bostrom, the development of superintelligence could potentially mitigate the existential risks posed by other advanced technologies like molecular nanotechnology or synthetic biology. Thus, prioritizing the creation of superintelligence before other potentially hazardous technologies could lower overall existential risk.

Developing superintelligence to oversee regular AI may seem like putting the cart before the horse, but superintelligence may not be far off. According to “Situational Awareness” by Leopold Aschenbrenner, we could see superintelligence within the next 10 years.

Artificial intelligence explosion

Before we know it, we would have superintelligence on our hands - AI systems vastly smarter than humans, capable of novel, creative, complicated behavior we couldn’t even begin to understand - perhaps even a small civilization of billions of them. Their power would be vast, too. Applying superintelligence to research and development in other fields, explosive progress would broaden from just machine learning research; soon they’d solve robotics, make dramatic leaps across other fields of science and technology within years, and an industrial explosion would follow. Superintelligence would likely provide a decisive military advantage, and unfold untold powers of destruction. We will be faced with one of the most intense and volatile moments of human history.