Understanding ChatGPT settings: Temperature, Top P, Presence penalty, and Frequency penalty

ChatGPT's sampling parameters make it a versatile tool for a variety of tasks. By adjusting temperature, Top P, Presence penalty, and Frequency penalty, users can shape the model's output to suit their specific needs. Whether the goal is creative writing, consistent answers, or a particular language style, understanding these parameters makes ChatGPT more useful and effective.

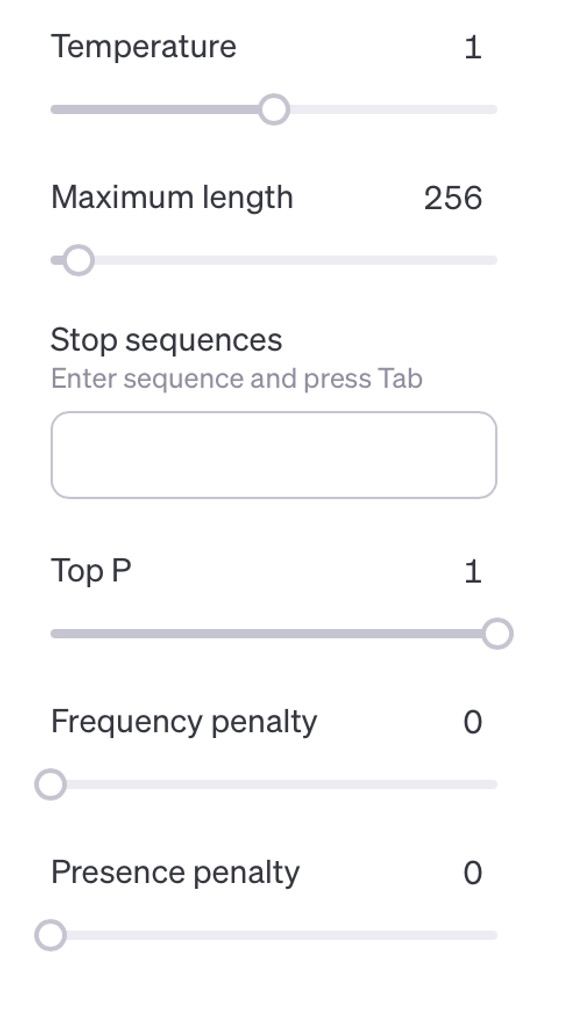

ChatGPT settings

Temperature

Temperature controls the randomness of the generated response. A higher temperature value increases randomness, making the responses more diverse and creative, while a lower value makes them more focused and deterministic.

For creative writing tasks or brainstorming ideas, a higher temperature value (e.g., 0.8-1.0) is often preferred to explore different possibilities. On the other hand, for fact-based queries or when generating precise answers, a lower temperature value (e.g., 0.2-0.5) is preferred because it makes responses more consistent and focused. A low temperature reduces variation, but it does not by itself guarantee that an answer is factually correct.
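To make the effect of temperature concrete, here is a minimal sketch, assuming the common formulation in which the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The function name and the three example scores are hypothetical and chosen only for illustration; this is not ChatGPT's actual implementation.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lowest temperature almost all of the probability lands on the top-scoring token, while at the highest the three options move closer together.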

Top P

The Top P parameter (also known as nucleus sampling) controls the diversity of the generated output by truncating the probability distribution of tokens. It acts as a filter on the candidates the language model considers while predicting the next token. For instance, when the Top P value is set at 0.4, the model only considers the smallest set of most probable tokens whose combined probability reaches 40%. This is not the same as 40% of the vocabulary: if a single token already has a probability above 40%, it is the only one considered.

Setting a higher Top P value (e.g., 0.9-1.0) ensures a broader range of options, resulting in more diverse responses. This can be useful for creative tasks where novelty is desired. Conversely, a lower Top P value (e.g., 0.1-0.5) limits the choices to the most probable ones, making the responses more focused and coherent.
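The filtering step can be sketched in a few lines, assuming the usual nucleus-sampling formulation: sort the candidates by probability, keep adding them until their combined probability reaches the Top P value, and renormalize what is left. The function name and the toy probabilities are hypothetical.

```python
def top_p_filter(probs, top_p):
    """Keep the smallest set of most probable tokens whose cumulative
    probability reaches top_p, then renormalize the survivors."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for token, p in ranked:
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {token: p / total for token, p in kept.items()}

# Hypothetical next-token probabilities.
probs = {"the": 0.5, "a": 0.3, "this": 0.15, "zebra": 0.05}
print(top_p_filter(probs, 0.4))  # only the single most likely token survives
print(top_p_filter(probs, 0.9))  # the three most likely tokens survive
```

Note that the number of surviving tokens depends on how the probability is spread, not on a fixed share of the vocabulary.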

What is the difference between Temperature and Top P?

Top P defines the pool of tokens (words and symbols) that ChatGPT can choose from. When Top P = 1, the language model can use any token while generating a response. When Top P = 0.5, it can only use the most probable tokens whose probabilities add up to 50%, which may be just a handful of options when the model is confident.

Temperature, on the other hand, determines how strongly ChatGPT favors the more probable tokens among the available options. With a temperature of 1, the bot samples from the model's probabilities unchanged. Lower values sharpen the distribution, so it leans more heavily towards the most likely words and phrases, whereas higher values flatten it and move the options closer to equal probability.

Optimal values for Temperature and Top P

The best temperature and Top P values for different tasks can vary depending on the specific requirements and preferences of the client or publication.

- For article writing, a lower temperature value (e.g., around 0.5-0.7) and a medium to high Top P value (e.g., around 0.8-0.9) can help generate more focused and coherent articles while still allowing for some creative input from the AI model.

- For product descriptions, a slightly higher temperature value (e.g., around 0.7-0.8) and a medium Top P value (e.g., around 0.7-0.8) can help create unique and engaging descriptions that stand out to potential customers.

- For language translation, a lower temperature value (e.g., around 0.5-0.7) and a medium to high Top P value (e.g., around 0.8-0.9) can help ensure accurate translations while maintaining a natural-sounding output.

- For virtual assistant tasks, a medium temperature value (e.g., around 0.7-0.8) and a medium to high Top P value (e.g., around 0.8-0.9) can help create interactive and helpful responses that are both informative and engaging.

- For content curation, a higher temperature value (e.g., around 0.8-0.9) combined with a low Top P value (e.g., around 0.2-0.4) can add some variety in wording while the narrow token pool keeps the curated content relevant.

- For code generation, which requires precision and adherence to conventions, a low temperature value (e.g., around 0.1-0.5) and a low Top P value (e.g., around 0.2) can help minimize randomness and keep the output close to established conventions. Low values make the code more predictable, but they do not guarantee that it is free of errors.
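The suggestions in the list above can be kept in one place as a small lookup table. This is only a sketch: the task keys and the helper function are hypothetical, and picking the midpoint of each range is an arbitrary starting point rather than a recommendation. The names `temperature` and `top_p` match the parameter names used by the OpenAI API.

```python
# Ranges copied from the list above, stored as (low, high) for each setting.
PRESETS = {
    "article_writing": {"temperature": (0.5, 0.7), "top_p": (0.8, 0.9)},
    "product_descriptions": {"temperature": (0.7, 0.8), "top_p": (0.7, 0.8)},
    "translation": {"temperature": (0.5, 0.7), "top_p": (0.8, 0.9)},
    "virtual_assistant": {"temperature": (0.7, 0.8), "top_p": (0.8, 0.9)},
    "content_curation": {"temperature": (0.8, 0.9), "top_p": (0.2, 0.4)},
    "code_generation": {"temperature": (0.1, 0.5), "top_p": (0.2, 0.2)},
}

def sampling_params(task):
    """Return the midpoint of each suggested range for the given task."""
    preset = PRESETS[task]
    return {name: round((low + high) / 2, 2) for name, (low, high) in preset.items()}

print(sampling_params("code_generation"))
print(sampling_params("article_writing"))
```

From there, the values can be adjusted up or down after reviewing a few sample outputs for the task at hand.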

Presence penalty

Both Presence penalty and Frequency penalty help to avoid repetition. They both penalize the use of the same words over and over again, but in slightly different ways. The Presence penalty penalizes tokens based on whether they appear in the generated text so far, irrespective of how often they occur.

This encourages ChatGPT to employ a more diverse vocabulary. The higher the Presence penalty value, the more pronounced the penalty becomes.

Frequency penalty

Frequency penalty penalizes tokens based on how often they appear in the text so far. If you notice the excessive use of the same words in the generated outcome, you may want to increase the value of this parameter.

Increasing the Presence penalty is like telling ChatGPT not to return to phrases or ideas it has already used, while increasing the Frequency penalty is like telling it not to use the same words too often.
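The difference between the two penalties is easier to see in code. The sketch below assumes the additive adjustment described in OpenAI's API documentation: each token's score is lowered by its count multiplied by the Frequency penalty, plus a one-time Presence penalty if it has appeared at all. The function name and the toy scores are hypothetical.

```python
from collections import Counter

def apply_penalties(logits, generated_tokens, presence_penalty=0.0, frequency_penalty=0.0):
    """Lower the score of tokens that already appear in the generated text."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, logit in logits.items():
        count = counts[token]
        adjusted[token] = (
            logit
            - count * frequency_penalty  # grows with every repetition
            - (1.0 if count > 0 else 0.0) * presence_penalty  # flat, applied once
        )
    return adjusted

# Hypothetical scores; "cat" has been used three times, "dog" once, "bird" never.
logits = {"cat": 2.0, "dog": 2.0, "bird": 2.0}
history = ["cat", "cat", "cat", "dog"]
print(apply_penalties(logits, history, presence_penalty=0.5))
print(apply_penalties(logits, history, frequency_penalty=0.5))
```

With only the Presence penalty, "cat" and "dog" are lowered by the same amount even though "cat" appeared three times. With only the Frequency penalty, "cat" is lowered three times as much as "dog".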

Optimal values for Presence and Frequency penalties

To moderately reduce repetition, suitable penalty coefficients generally range from 0.1 to 1. If the goal is to suppress repetition strongly, the coefficients can be increased up to 2.

Nevertheless, it is important to note that this increase may result in a noticeable decrease in sample quality. Alternatively, negative values can be employed to intentionally enhance the likelihood of repetition.