What is OpenAI o1 and how is it better than GPT-4o?

On September 12, 2024, OpenAI, the company behind ChatGPT, introduced a new series of artificial intelligence models called OpenAI o1. In this article, we look at how OpenAI o1 differs from GPT-4o, what its strengths are, and where it can be used.

What is OpenAI o1?

OpenAI o1 is a new family of chatbots, or, more accurately, AI language models, designed to solve complex and particularly difficult problems that require accuracy and logical reasoning.

Currently, the o1 family includes:

- o1-preview – the main model (still in an early version, as indicated by the word "preview"),

- o1-mini – a lighter, faster model that is particularly effective at coding.

There is some symbolism in the name “o1” itself. In its announcement, OpenAI notes that the new models still lack many of GPT-4o's features, but adds:

“For complex reasoning tasks this is a significant advancement and represents a new level of AI capability. Given this, we are resetting the counter back to 1 and naming this series OpenAI o1.”

Differences from GPT-4o

OpenAI o1 is an alternative to GPT-4o, not a direct replacement; otherwise, it would simply have been called GPT-5.

Because it is at a relatively early stage of development, OpenAI o1 cannot yet do many of the things GPT-4o can. For example, it does not support uploading files or images.

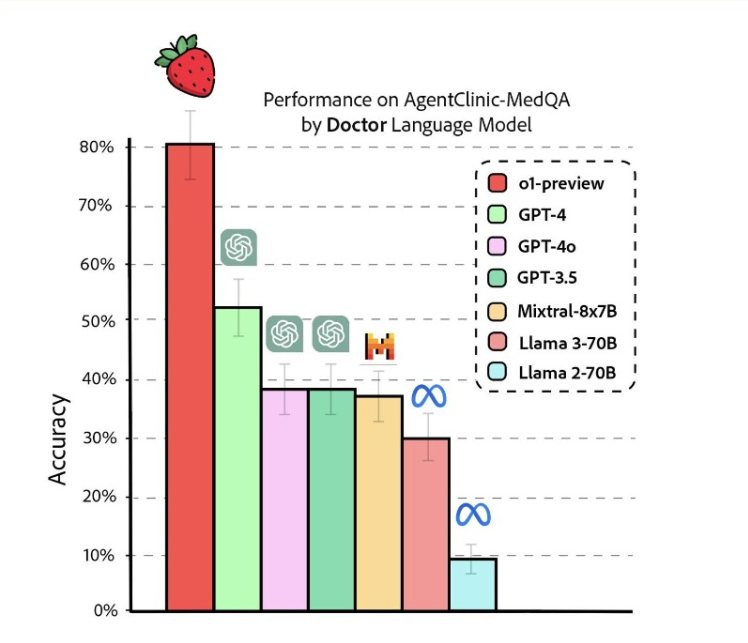

However, the o1 models excel in the accuracy of their responses and in the consistency and logic of their reasoning, which makes them well suited to areas such as:

- Quantum physics,

- Genetics,

- Medicine,

- Software development.

OpenAI o1 does not simply generate an answer to a question; it builds a chain of reasoning first. Because of this, the model may take longer to respond than other chatbots: typically 5 to 10 seconds, and in some cases 20 to 30 seconds. That is not long enough to be a real inconvenience. This careful consideration also makes the o1 models less prone to hallucinations than their competitors. Hallucinations are cases where a chatbot makes up facts out of thin air and presents false information.

OpenAI o1's strengths and evaluations

We have already mentioned OpenAI o1's strengths, such as accurate responses and a low tendency to hallucinate. Now let's see how this translates into numbers: how the o1 model scores on various tests.

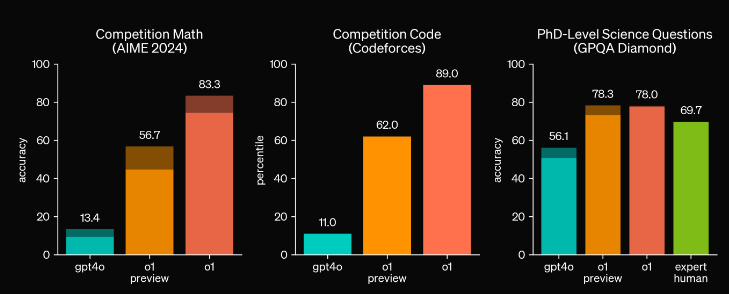

OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

The chart above shows, from left to right: competition math (AIME), competition code (Codeforces), and PhD-level science questions (GPQA).

On the 2024 AIME exams, GPT-4o correctly solved only 13% of problems, while o1 scored 83%.

On the GPQA Diamond test, which consists of PhD-level science questions in physics, biology, and chemistry, o1 models did even better than human experts. No AI model had outperformed human experts on this test before.

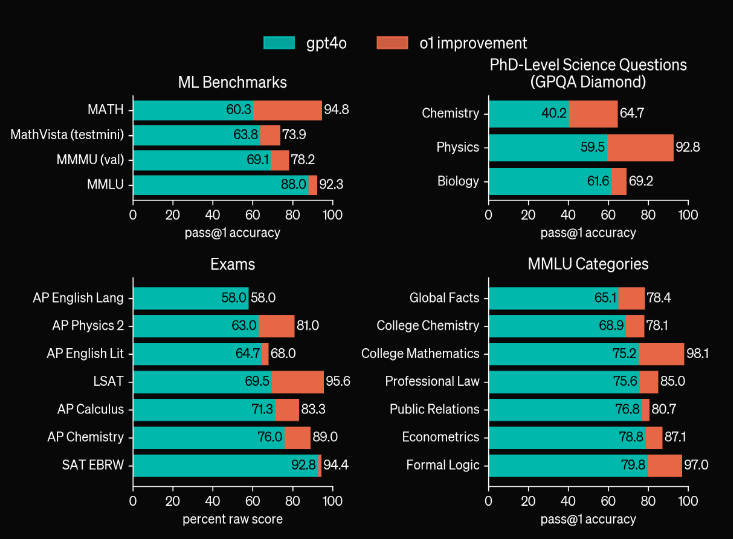

Turquoise: GPT-4o, Red: o1

The image above shows o1 outperforming GPT-4o in disciplines ranging from math to English literature. The MMLU benchmark covers 57 subject categories, and o1 beat GPT-4o in 54 of them. Seven are shown in the picture:

- Global Facts

- College Chemistry

- College Mathematics

- Professional Law

- Public Relations

- Econometrics

- Formal Logic

Interestingly, o1-mini performs better at coding than o1-preview, as both the Codeforces and HumanEval benchmarks show:

Coding proficiency benchmarks

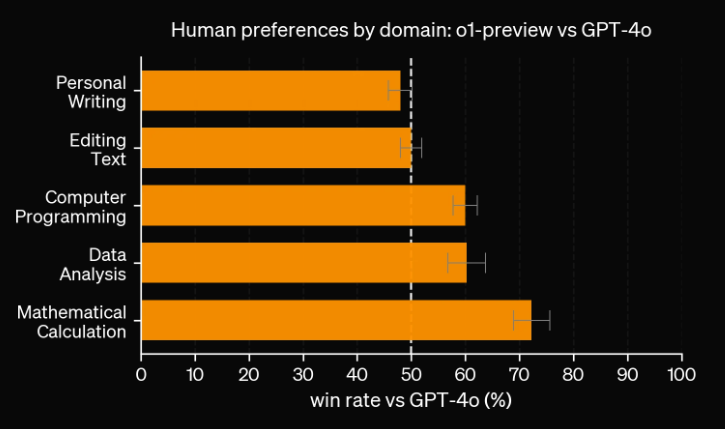

In addition to exams and academic benchmarks, OpenAI also measured human preference for o1-preview versus GPT-4o in the following categories:

- Personal Writing

- Editing Text

- Computer Programming

- Data Analysis

- Mathematical Calculation

In this evaluation, human trainers were shown anonymized answers from o1-preview and GPT-4o, and voted for which response they preferred.

o1-preview win rate vs GPT-4o (%)

o1-preview is preferred to GPT-4o by a large margin in reasoning-heavy categories like data analysis, coding, and math. However, it is not preferred on some natural language tasks, such as writing and editing text, which suggests that o1-preview is not well suited to every use case.

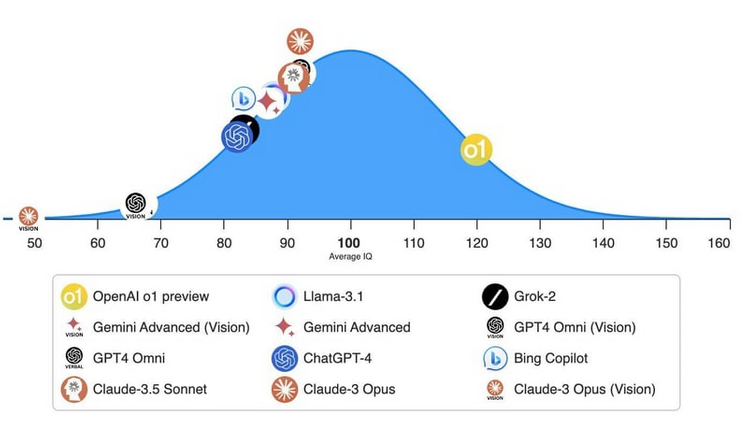

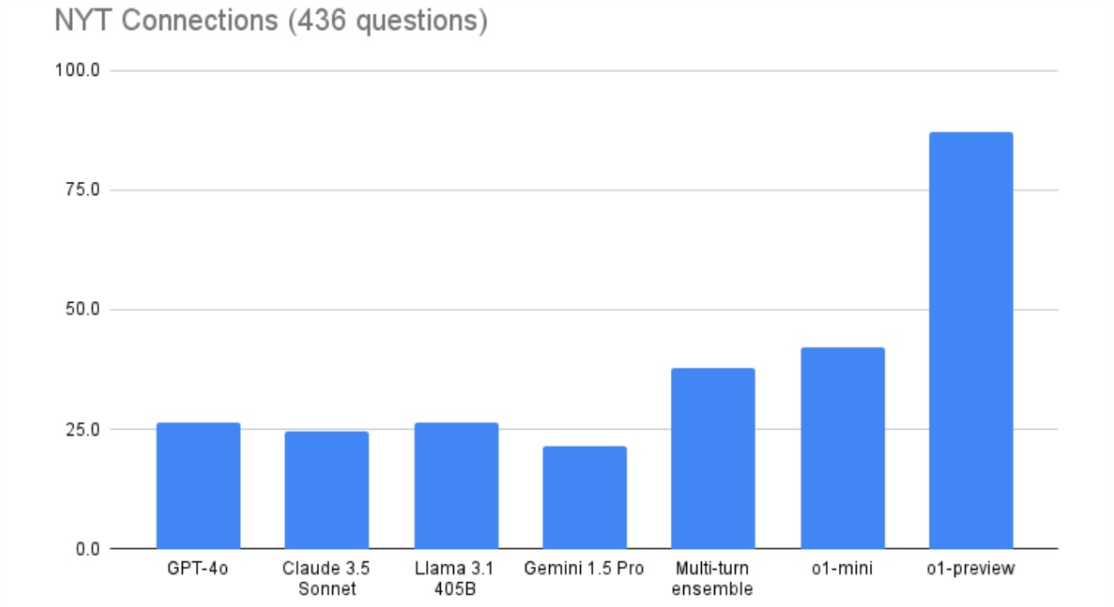

OpenAI o1 vs other large language models

OpenAI o1-preview is really smart. How smart? On the Mensa Norway test, it scored an IQ of 120, significantly higher than the other language models tested. The peak of the curve on the graph represents the average human IQ (100): everything to the left of the peak is below average, and everything to the right is above average.
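To put a score of 120 in perspective, here is a minimal sketch that converts an IQ score into a population percentile. It assumes the scores follow a normal distribution with mean 100 and standard deviation 15, a common norming convention but an assumption about this particular test. Under that assumption, 120 sits about 1.33 standard deviations above the peak of the curve.

```python
import math


def iq_percentile(iq: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Fraction of a normally distributed population scoring below `iq`.

    Assumes mean 100 and standard deviation 15 (a common IQ norming convention).
    """
    z = (iq - mean) / sd  # distance from the average, in standard deviations
    # Standard normal CDF expressed through the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


print(f"{iq_percentile(120):.1%}")  # roughly 91% of people score below 120
```

With these assumptions, an IQ of 120 lands at roughly the 91st percentile, well to the right of the peak.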

OpenAI o1 blows the competition out of the water in major benchmarks and homemade tests alike.

You don’t even need to understand these tests; it is enough to see OpenAI o1 at the top.

Chain of thought

Models of the o1 series are trained to reason by building a chain of thought. As a result, the chatbot not only gives a ready-made answer but also shows a summary of the path it took to get there. Following this path can be very interesting.

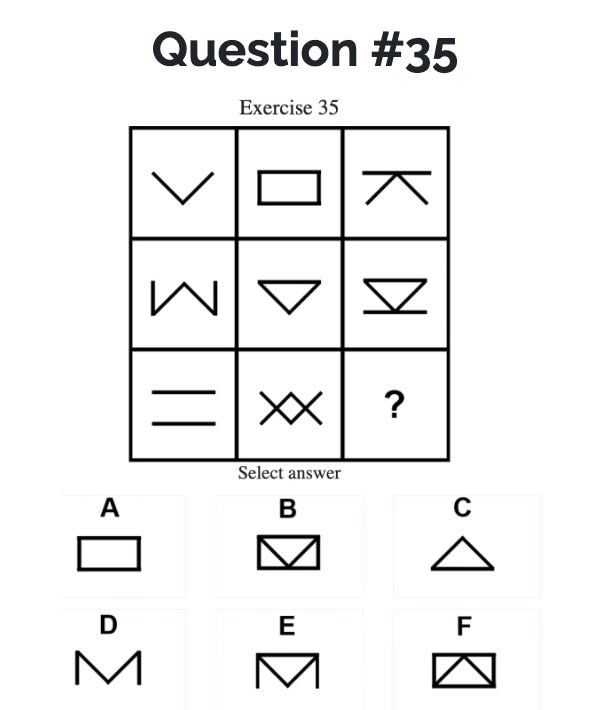

Let’s look at one of the harder questions from the IQ test mentioned earlier.

The bot picked Option D as the answer, and here’s how it explained it:

Option D: By analyzing the patterns in the grid, we see that each column combines certain components to include all elements (V, upside-down V, rectangle sides) by the third row. In the third column, components R_left and R_right (the rectangle's sides) have not yet appeared. Therefore, the correct answer for the empty cell is Option D, which includes the normal 'v' and the two sides of the rectangle, completing the set of components for that column.

Now let’s look at a simpler puzzle (not from the IQ test):

Question: Mr. and Mrs. Smith and their two children form a typical American family. According to one of their more talkative neighbors: George and Dorothy are blood relatives. Howard is older than George. Virginia is younger than Howard. Virginia is older than Dorothy. If two and only two of these statements are true, what is the first name of each member of the family?

Chain of thought: To solve this puzzle, we need to determine the first names of each family member based on the given statements and the condition that exactly two statements are true. Let’s break down the information step by step.

Next, the model lists the family members with their possible roles and names, then analyzes the statements to determine which are true and which are false. Based on that analysis, it gives the final answer.

Answer: Mr. Smith is George, Mrs. Smith is Virginia, Son is Howard, Daughter is Dorothy.
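The model's answer can be checked independently with a small brute-force search. This sketch rests on assumptions the puzzle leaves implicit: George and Howard are male names and Dorothy and Virginia are female; the family is a father, mother, son, and daughter; both parents are older than both children; and the spouses are the only pair who are not blood relatives. The helper names are illustrative. The code tries every role assignment and every age ordering consistent with those rules, and keeps the assignments under which exactly two statements are true.

```python
from itertools import permutations

# Assumption: gender is inferred from the first names.
MALE = {"George", "Howard"}
FEMALE = {"Dorothy", "Virginia"}
NAMES = sorted(MALE | FEMALE)


def role_assignments():
    """Yield dicts mapping each role (father, mother, son, daughter) to a name."""
    for father in MALE:
        for mother in FEMALE:
            son = (MALE - {father}).pop()
            daughter = (FEMALE - {mother}).pop()
            yield {"father": father, "mother": mother, "son": son, "daughter": daughter}


def age_orders(roles):
    """Yield age ranks (higher = older) where both parents are older than both children."""
    parents = {roles["father"], roles["mother"]}
    children = {roles["son"], roles["daughter"]}
    for order in permutations(NAMES):
        rank = {name: i for i, name in enumerate(order)}
        if all(rank[p] > rank[c] for p in parents for c in children):
            yield rank


def blood_relatives(a, b, roles):
    # Assumption: only the husband and wife are not related by blood.
    return {a, b} != {roles["father"], roles["mother"]}


def solve():
    solutions = set()
    for roles in role_assignments():
        for rank in age_orders(roles):
            statements = [
                blood_relatives("George", "Dorothy", roles),  # George and Dorothy are blood relatives
                rank["Howard"] > rank["George"],  # Howard is older than George
                rank["Virginia"] < rank["Howard"],  # Virginia is younger than Howard
                rank["Virginia"] > rank["Dorothy"],  # Virginia is older than Dorothy
            ]
            if sum(statements) == 2:  # exactly two statements are true
                solutions.add(tuple(sorted(roles.items())))
    return solutions


for solution in solve():
    print(dict(solution))
```

Under these assumptions only one assignment survives, and it matches the model's answer: George and Virginia are the parents, Howard is the son, and Dorothy is the daughter.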

Conclusion

The o1-preview model is capable of the logical reasoning needed to solve complex scientific and mathematical problems, and the o1-mini model excels at writing code. However, both OpenAI o1 models are fairly specialized tools: they are less versatile than GPT-4o and are not well suited to many routine or creative tasks, such as working with text, literary translation, and editing. Within their domain (mathematics and the natural and exact sciences), though, the OpenAI o1 models are unparalleled.